Background

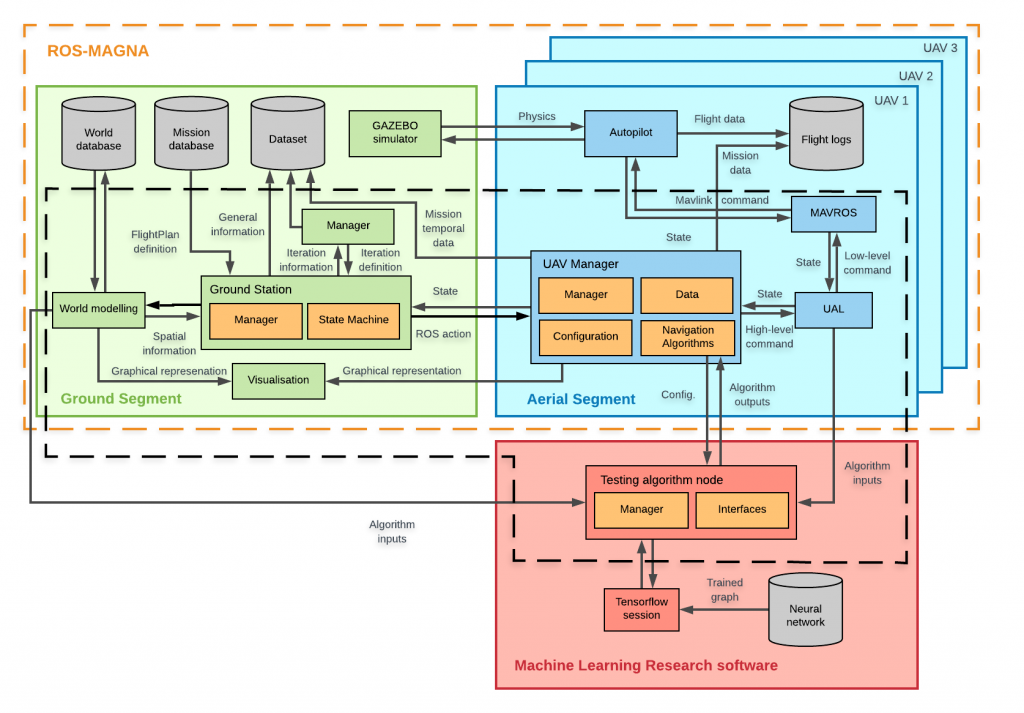

MAGNA was conceived to solve a problem I found when I decided to practise ML applied to drones: there was no easy-to-use framework that took care of all the work required to prepare a set of simulated cooperative missions, allowed direct integration of the algorithms under test, and made agent behaviors and environment shapes easy to modify.

Since then, the idea has expanded to accommodate features such as TensorFlow integration and the requirements of the GRVC projects that used MAGNA. Hence, the work is a hybrid between my Master's thesis and my research work at GRVC. Part of the results of MAGNA were published. Explanatory videos here.