Background

Sometimes a mission must be controlled while the pilot or, more generally, the commander is carrying out another task at the same time. Having to think in a complex coded language, or even type it into a graphical interface, during a critical situation that demands speed and clear thinking causes a significant loss of efficiency. With this situation in mind, the goal of this part of the project is to provide a layer of abstraction for commanding.

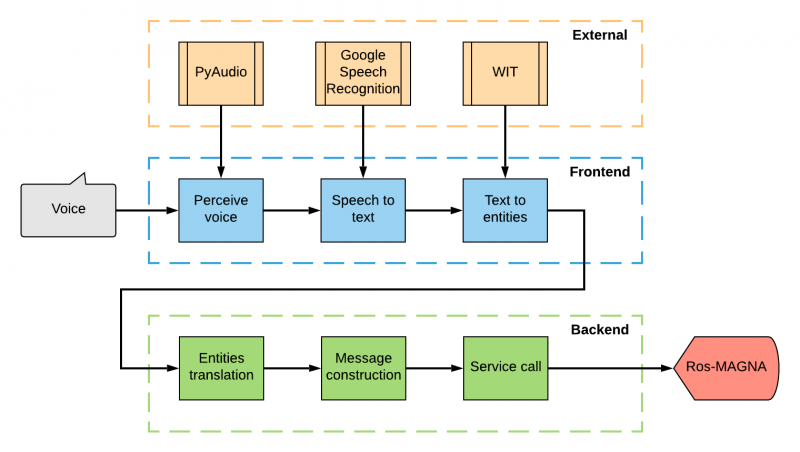

This work is part of the Master's thesis of the Master's in Mechanical Engineering. A natural language commanding tool has been developed that can be used via the keyboard in a terminal or simply by voice. This way, the commander can stay in charge of the mission, following its current state through the visualization tools described in the MAGNA part while issuing commands orally.